Titan X GPU Deep Learning

Intel’s evaluation of various emerging DNNs on two generations of FPGAs (Intel Arria 10 and Intel Stratix 10) and the latest Titan X GPU shows that current trends in DNN algorithms may favor FPGAs, and that FPGAs may even offer superior performance.

The detailed part list is in the link above, but below are the specific things I got for deep learning applications. GPU: I went with a Titan X (Maxwell). I was fortunate enough to get NVIDIA's GPU...

NVIDIA TITAN X with Pascal: When tackling deep learning challenges, nothing short of ultimate performance will get the job done. The latest Titan X is the first GPU to consider. The Titan X with Pascal is built atop NVIDIA's new upsized GP102 GPU.

Graphics processing units (GPUs), originally developed for accelerating graphics processing, can dramatically speed up computational processes for deep learning. They are an essential part of a modern artificial intelligence infrastructure, and new GPUs have been developed and optimized specifically for deep learning.

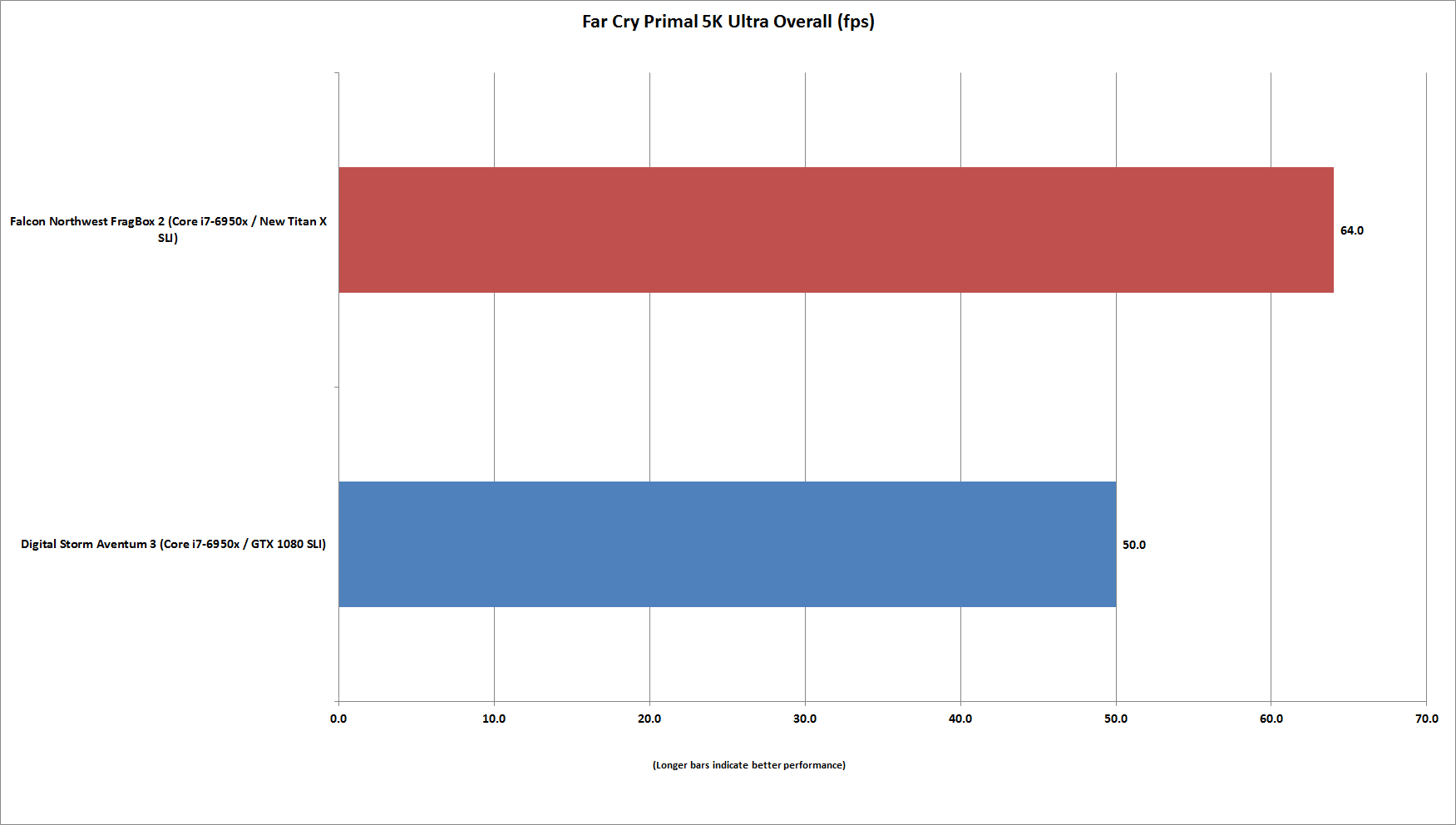

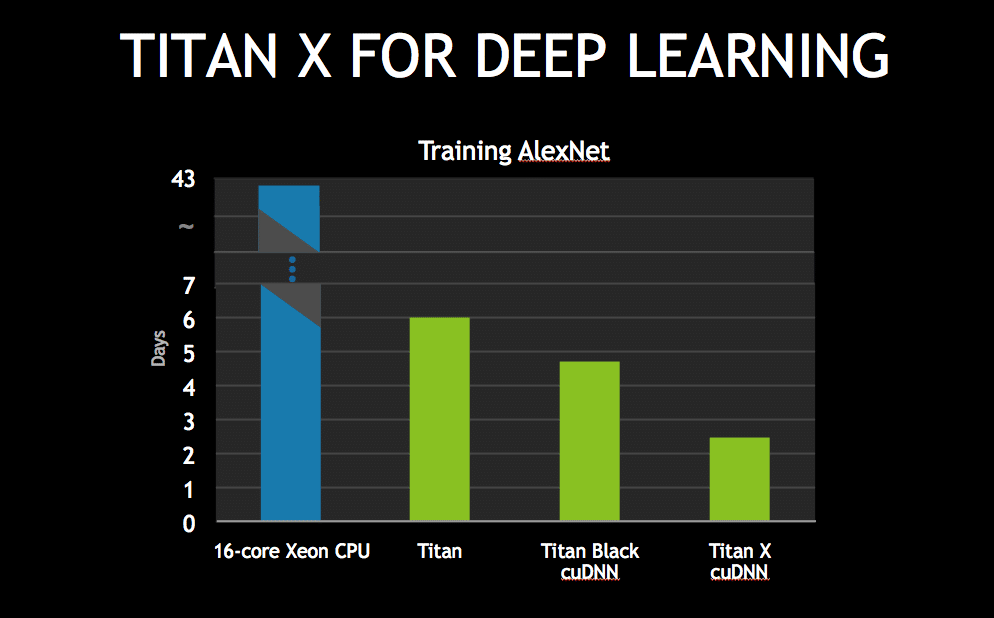

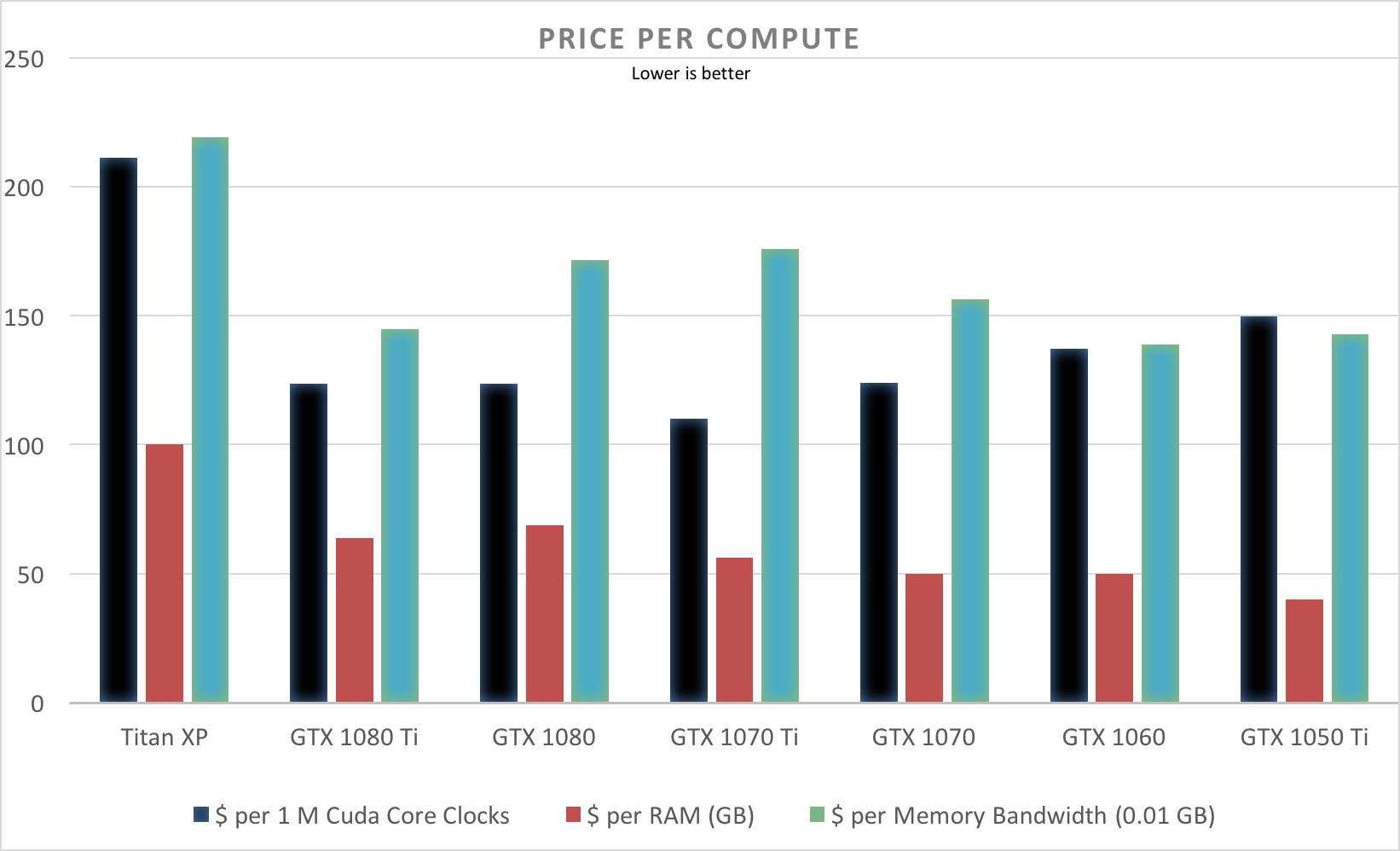

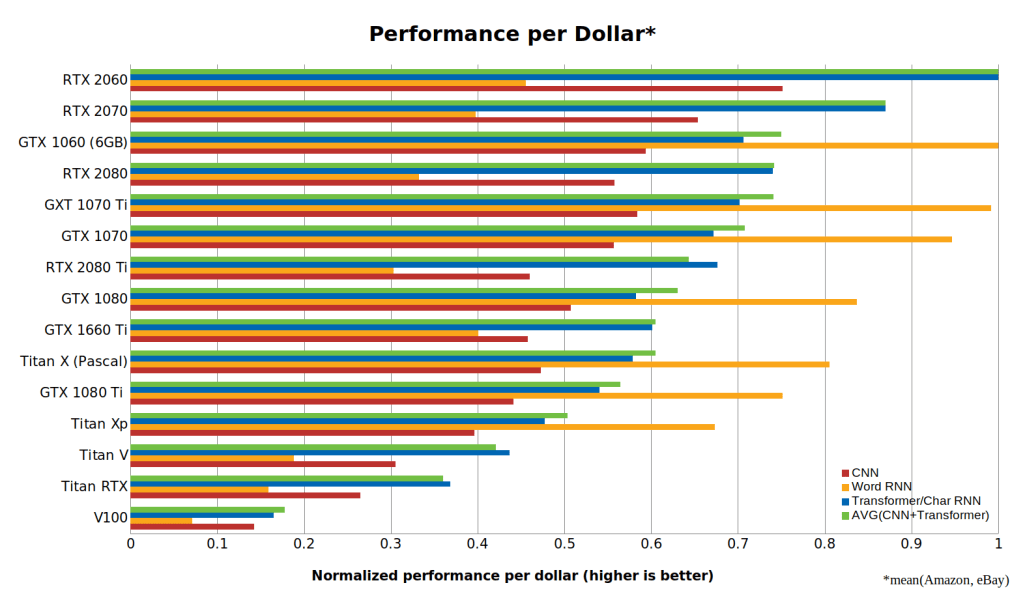

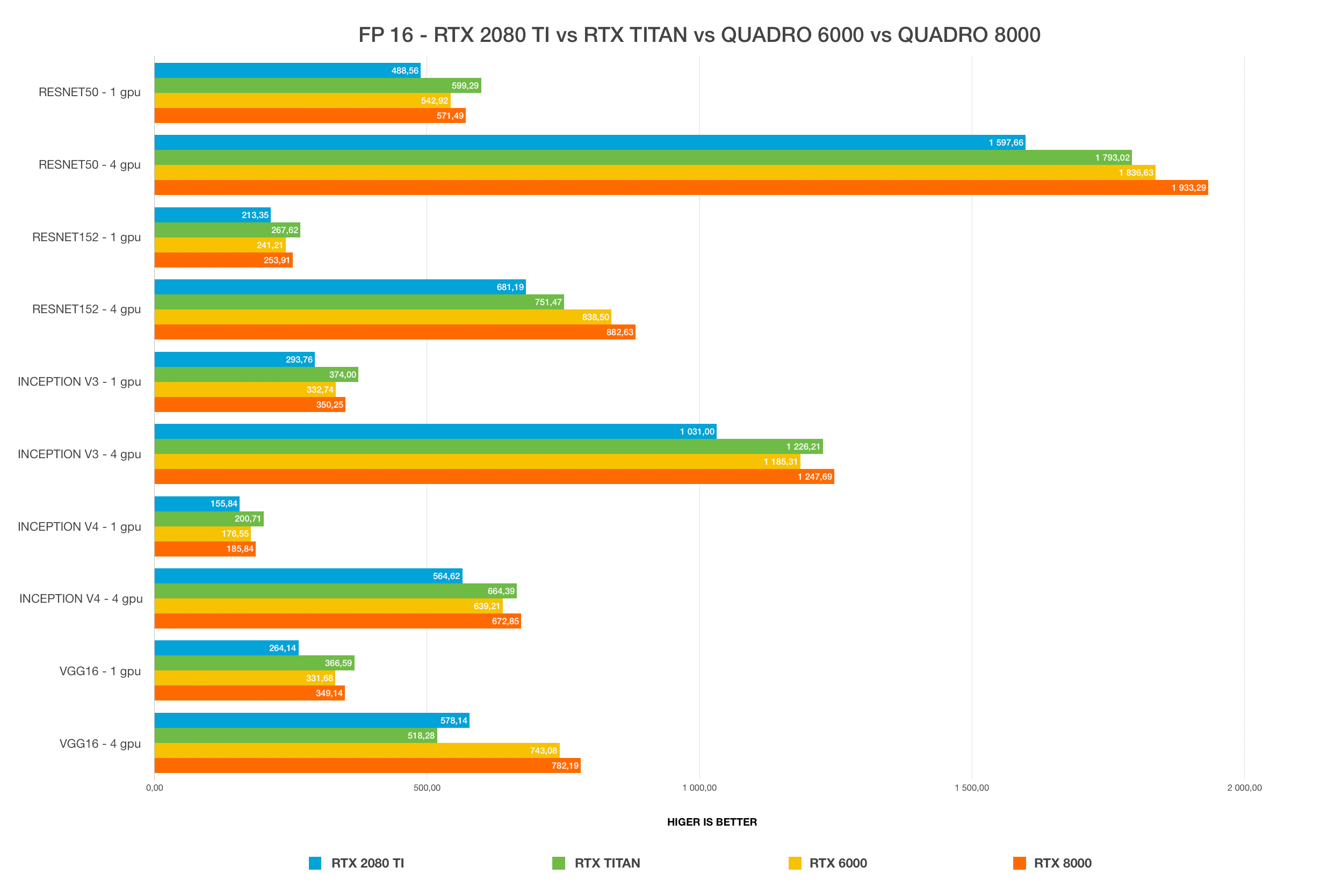

Deep learning software: NVIDIA CUDA-X AI is a complete deep learning software stack for researchers and software developers to build high-performance GPU-accelerated applications for conversational AI, recommendation systems, and computer vision. CUDA-X AI libraries deliver world-leading performance for both training and inference across industry benchmarks such as MLPerf.

The new Maxwell-based GeForce GTX Titan X is capable of training AlexNet for deep learning far faster than previous GPUs like the Titan and Titan Black, and even a 16-core Xeon CPU from Intel.

The Titan X has slightly higher performance than the 1080 Ti. However, the 1080 Ti is a much better GPU from a price/performance perspective. Check out the Lambda Labs post "Machine Learning GPU Benchmarks for 2019": the Titan X is ~11% faster than the GTX 1080 Ti for training networks like ResNet-50, ResNet-152, Inception v3, and Inception v4.

The GeForce GTX TITAN X is an enthusiast-class graphics card by NVIDIA, launched in March 2015. Built on the 28 nm process and based on the GM200 graphics processor, in its GM200-400-A1 variant, the card supports DirectX 12. This ensures that all modern games will run on the GeForce GTX TITAN X. The GM200 graphics processor is a large chip with a...

With Titan X, Nvidia is pushing hard into "deep learning," a flavor of artificial intelligence development that the company believes will be "an engine of computing innovation for areas as diverse..."

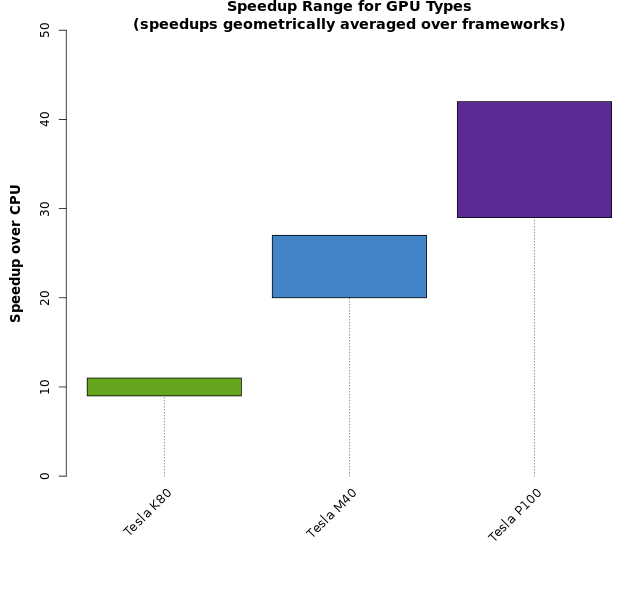

Some of these can be configured with the Nvidia Tesla K40 or now K80 GPUs, which are aimed at high performance computing, but of course, for the more price-conscious, the Titan X cards are equally appealing, particularly for the deep learning training market.

It's not only a multicore CPU monster with new Intel Deep Learning Boost (Intel DL Boost), but can double as a titan of GPU-centric workloads as well, all thanks to the latest, cutting-edge workstation CPU technology from Intel. On the new Xeon W they added more pipes!

"Which GPU(s) to Get for Deep Learning: My Experience and Advice for Using GPUs in Deep Learning" by Tim Dettmers. Deep learning is a field with intense computational requirements, and your choice of GPU will fundamentally determine your deep learning experience.

The TITAN RTX is a good all-purpose GPU for just about any deep learning task. When used as a pair with the NVLink bridge, you effectively have 48 GB of memory to train large models, including big transformer models for NLP.

There's been much industry debate over which NVIDIA GPU card is best suited for deep learning and machine learning applications. At GTC 2015, NVIDIA CEO and co-founder Jen-Hsun Huang announced the release of the GeForce Titan X, touting it as "the most powerful processor ever built for training deep neural networks".

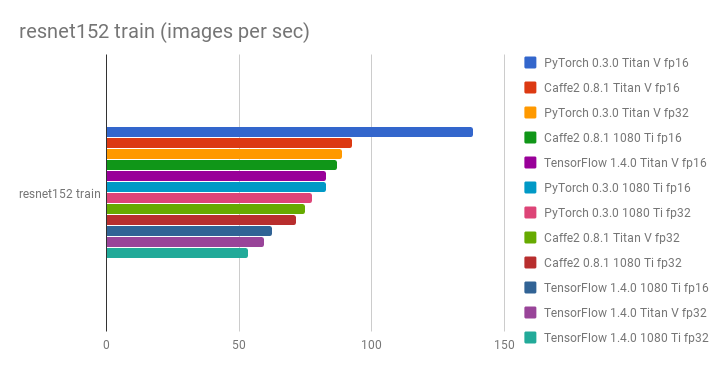

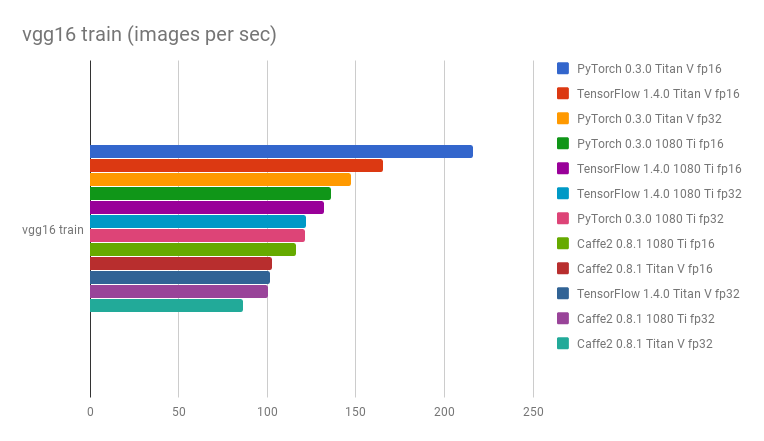

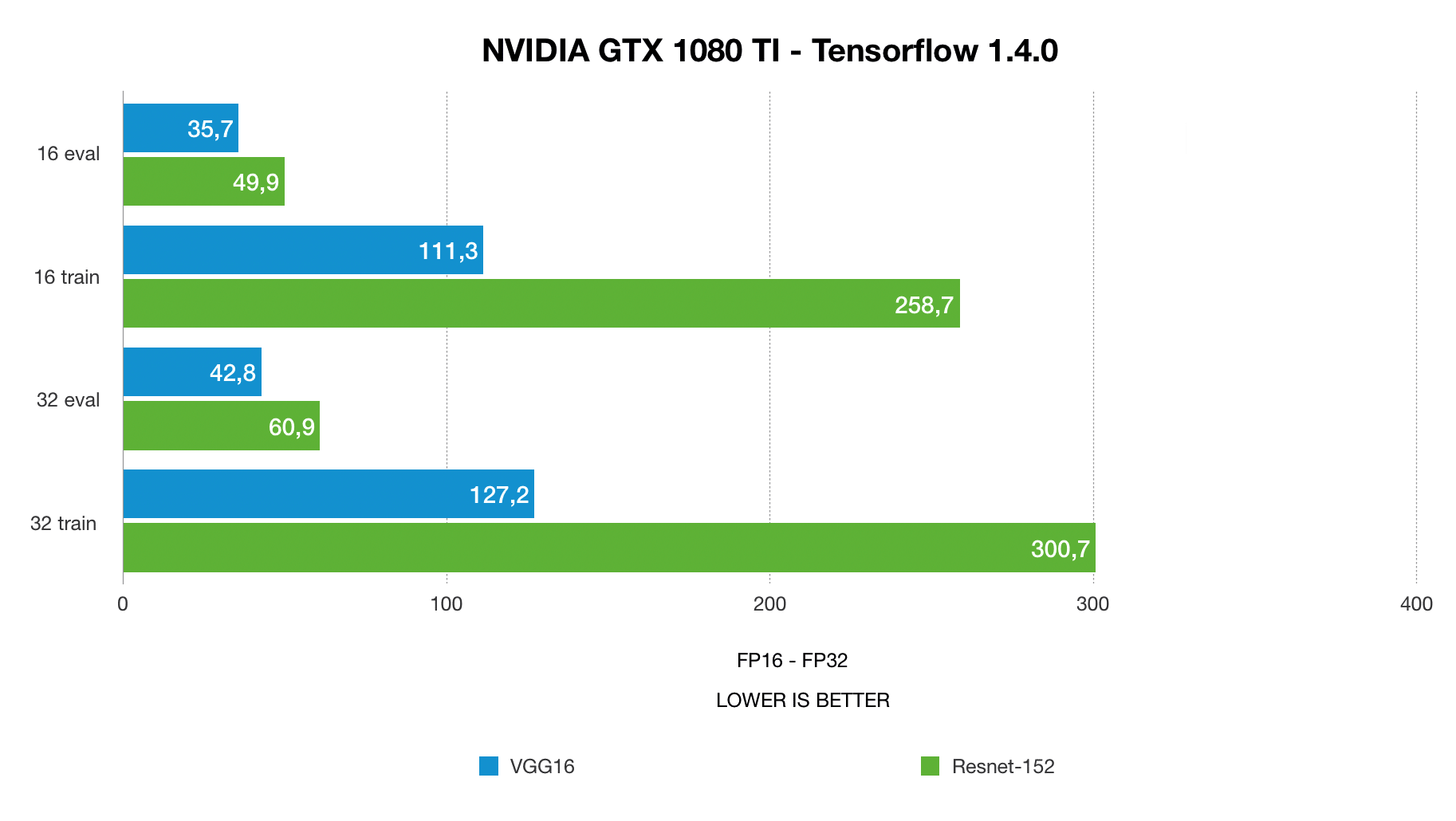

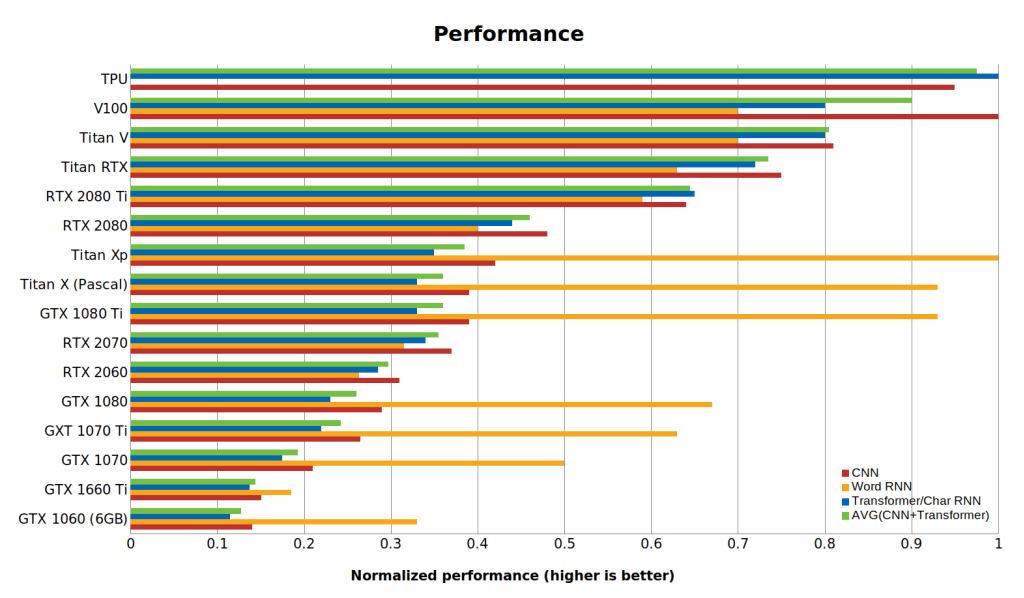

Performance Results: Deep Learning. Of course, Titan V remains a formidable graphics card, and it's able to trade blows with Titan RTX using FP16 and FP32.

There are two identical Nvidia GTX TITAN X cards on the board, and I can use these cards as usual. However, when I run any deep learning program (e.g. Caffe, TensorFlow, MatConvNet), the system crashes and the screen goes black. The output of the command "nvidia-smi" is as follows: "Unable to determine the device handle for GPU GPU is lost."

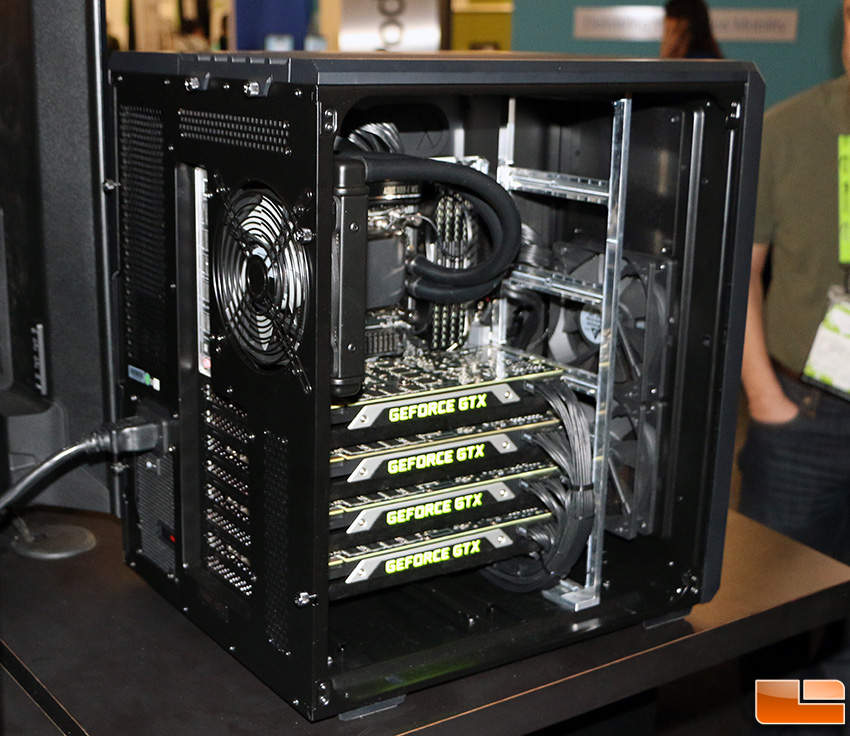

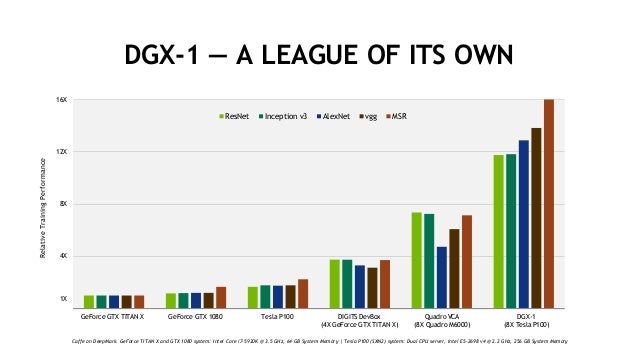

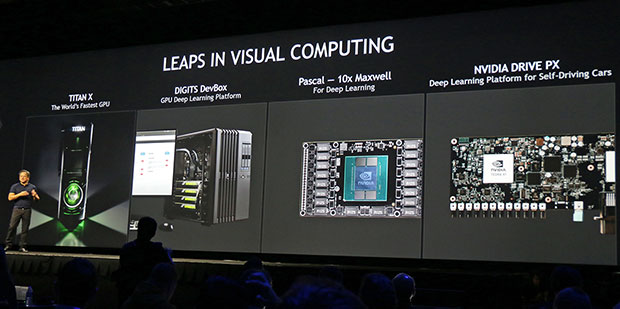

Nvidia is hoping to pack the most punch it can into a single GPU with this month's debut of Titan X, which is being touted for cutting-edge mobile gaming and deep learning alike. Artificial...

The new DIGITS Deep Learning GPU Training System software is an "all-in-one graphical system for designing, training, and validating deep neural networks for image classification," Nvidia said. The DIGITS DevBox is the "world's fastest deskside deep learning machine" with four Titan X GPUs at the heart of a platform designed to accelerate deep...

GPU-accelerated Deep Learning on Windows 10 native (philferriere/dlwin): NVIDIA GeForce Titan X, 12GB RAM, Driver version / Win 10 64.

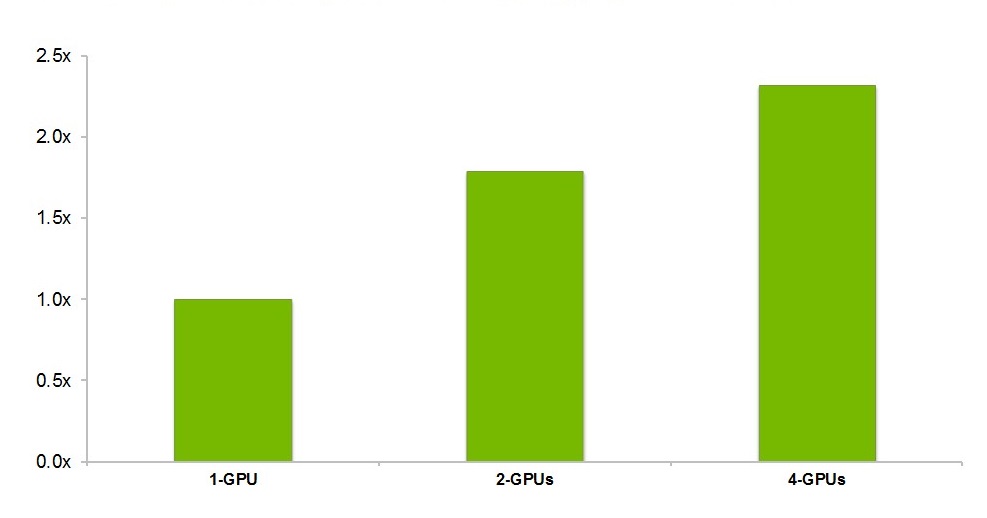

The RAPIDS tools bring to machine learning engineers the GPU processing speed improvements deep learning engineers were already familiar with. To make products that use machine learning we need to iterate and make sure we have solid end-to-end pipelines, and using GPUs to execute them will hopefully improve our outputs for the projects.

Very early results of multi-GPU training show the DIGITS DevBox delivers almost 4X higher performance than a single TITAN X on key deep learning benchmarks. Training AlexNet can be completed in only 13 hours with the DIGITS DevBox, compared to over 2 days with the best single-GPU PC, or over a month with a CPU-only system.
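The DevBox figures quoted above can be sanity-checked with a little arithmetic. The sketch below is only an illustration: it treats "over 2 days" as exactly 48 hours and "over a month" as 30 days (both assumptions, so the ratios are lower bounds), and it assumes the "best single GPU PC" is comparable to a single TITAN X. The function names are made up for this example.

```python
# Training times for AlexNet quoted above, converted to hours.
# "over 2 days" and "over a month" are lower bounds, so the speedups
# computed from them are lower bounds as well.
HOURS_DEVBOX = 13.0
HOURS_SINGLE_GPU = 2 * 24.0   # "over 2 days"
HOURS_CPU_ONLY = 30 * 24.0    # "over a month"; 30 days is an assumption
N_GPUS = 4                    # Titan X cards in the DIGITS DevBox


def speedup(baseline_hours: float, new_hours: float) -> float:
    """How many times faster the new system finishes the same job."""
    return baseline_hours / new_hours


def scaling_efficiency(observed_speedup: float, n_gpus: int) -> float:
    """Fraction of ideal linear scaling achieved across n_gpus."""
    return observed_speedup / n_gpus


vs_single_gpu = speedup(HOURS_SINGLE_GPU, HOURS_DEVBOX)
vs_cpu_only = speedup(HOURS_CPU_ONLY, HOURS_DEVBOX)
efficiency = scaling_efficiency(vs_single_gpu, N_GPUS)

print(f"vs single GPU: {vs_single_gpu:.1f}x")
print(f"vs CPU only:   {vs_cpu_only:.0f}x")
print(f"4-GPU scaling efficiency: {efficiency:.0%}")
```

Under these assumptions the single-GPU ratio comes out a little under 4, which is consistent with the "almost 4X" claim for four cards.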

NVIDIA made two chips: one is GP100 and the other is GP102. GP102 powers the GeForce cards and GP100 powers the Tesla cards. GP100 has the same FP32 performance as GP102. By the way, GP102 is in the Titan X and 1080 Ti; the rest of the GeForce lineup uses cut-down...

The NVIDIA Titan Xp is a great card for GPU-accelerated machine learning workloads and offers a noticeable improvement over the Titan X Pascal card that it replaces. However, for these workloads running on a workstation, the GTX 1080 Ti offers much better value.

To facilitate deep learning testing, we set up a Core iK (6C/12T) CPU on a Gigabyte Z370 Aorus Ultra Gaming motherboard. All four of its memory slots were populated with 16GB Corsair...

ASUS X99-E WS/USB 3.1 motherboard, 64GB RAM, 512GB PCIe SSD, Intel Core i7 6850K CPU and the Nvidia Titan X Pascal GPU. EKWB water cooling kit for both CPU and GPU.

In this post I'll be looking at some common machine learning (deep learning) application job runs on a system with 4 Titan V GPUs. I will repeat the job runs at PCIe X16 and X8. I looked at this issue a couple of years ago and wrote it up in the post "PCIe X16 vs X8 for GPUs when running cuDNN and Caffe".

The TITAN X is premium-priced, starting at $1,200, $200 more than the Maxwell TITAN X, and it is only available directly from Nvidia. Besides being the world's fastest video card, the Pascal TITAN X is also a hybrid card, like the Maxwell version, that is well suited for single-precision (SP) and deep learning compute programs.

As of February 8, 2019, the NVIDIA RTX 2080 Ti is the best GPU for deep learning research on a single-GPU system running TensorFlow. A typical single-GPU system with this GPU will be 37% faster than the 1080 Ti with FP32, 62% faster with FP16, and 25% more expensive.
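The RTX 2080 Ti versus 1080 Ti figures above translate directly into a performance-per-dollar comparison. The following is a minimal sketch using only the three quoted percentages; `value_ratio` is a hypothetical helper name, and the result describes whole-system price as quoted, not card price.

```python
def value_ratio(relative_speed: float, relative_price: float) -> float:
    """Performance per dollar relative to the baseline system (baseline = 1.0)."""
    return relative_speed / relative_price


# Figures quoted above for an RTX 2080 Ti system relative to a 1080 Ti system.
SPEED_FP32 = 1.37   # 37% faster with FP32
SPEED_FP16 = 1.62   # 62% faster with FP16
PRICE = 1.25        # 25% more expensive

fp32_value = value_ratio(SPEED_FP32, PRICE)
fp16_value = value_ratio(SPEED_FP16, PRICE)

print(f"FP32 performance per dollar vs 1080 Ti: {fp32_value:.3f}")
print(f"FP16 performance per dollar vs 1080 Ti: {fp16_value:.3f}")
```

A ratio above 1.0 means the newer system delivers more training throughput per dollar than the baseline. With these numbers the advantage is modest in FP32 (about 1.10) and larger in FP16 (about 1.30).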

Deep Learning Benchmarks Comparison 2019: RTX 2080 Ti vs TITAN RTX vs RTX 6000 vs RTX 8000. Selecting the Right GPU for your Needs.

Advantages of On-Premises Deep Learning and the Hidden Costs of Cloud Computing.

NVIDIA GeForce GTX 1080 Ti, 11GB RAM.

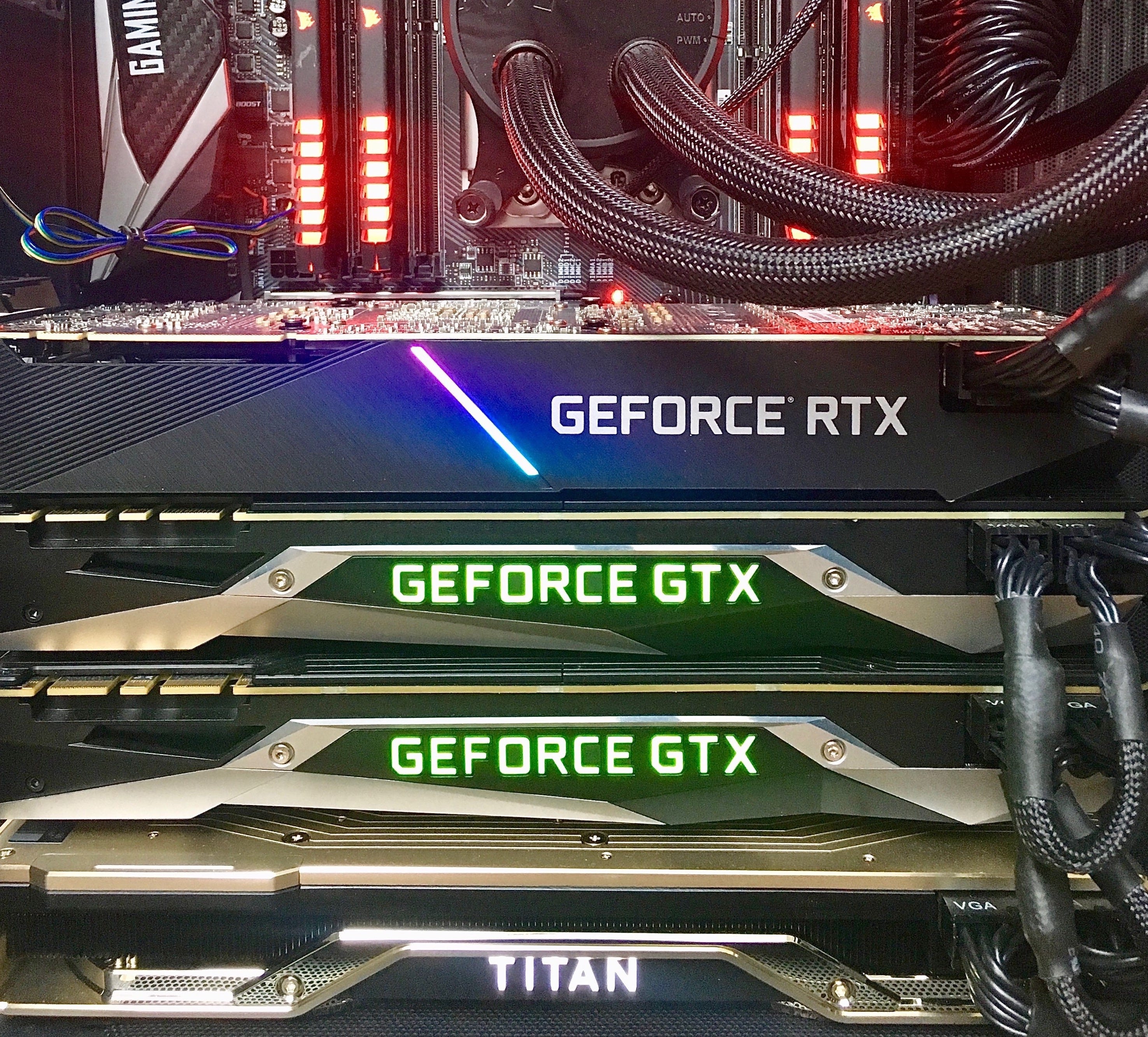

My deep learning computer with 4 GPUs: one Titan RTX, two 1080 Ti and one 2080 Ti. The Titan RTX must be mounted on the bottom because the fan is not blower style. Benchmarking your Deep Learning...

I am planning to build a very high-end deep learning machine with as many Xeon or i7 CPU cores and as many Titan X GPU cards as possible on a single motherboard. So what is the maximum limit for th...

I am building an 8 x Nvidia GTX Titan X GPU box for my research in deep learning for algo trading, but I decided to change it a bit to 4 x Nvidia GTX Titan X plus 4 x Nvidia GTX Titan Z in order to accommodate some mathematical calculations using double precision.

Perhaps the most important attribute to look at for deep learning is the available RAM on the card. If TensorFlow can't fit the model and the current batch of training data into the GPU's RAM, training will typically fail with an out-of-memory error or have to fall back to the CPU, making the GPU pointless. Another key consideration is the architecture of the graphics card.

For example, the Pascal Titan X is twice the speed of a Maxwell Titan X according to this benchmark. Most of the papers on machine learning use the TITAN X card, which is fantastic but costs at least $1,000, even for an older version.
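The memory constraint described above (model plus current batch must fit in the card's RAM) can be estimated before buying or training. The sketch below is a rough back-of-the-envelope model, not how TensorFlow actually accounts for memory: it assumes FP32 values, one optimizer state per parameter, and a fixed activation cost per sample. The parameter count and the 100 MiB-per-sample activation figure are hypothetical placeholders; the 12 GB capacity matches the Titan X cards mentioned in this document.

```python
GIB = 1024 ** 3
MIB = 1024 ** 2


def static_bytes(n_params: int, bytes_per_value: int = 4,
                 optimizer_states: int = 1) -> int:
    """Memory for weights, gradients, and optimizer state (batch-independent)."""
    return n_params * bytes_per_value * (2 + optimizer_states)


def training_bytes(n_params: int, batch_size: int,
                   activation_bytes_per_sample: int) -> int:
    """Rough total: static memory plus activations for one batch."""
    return static_bytes(n_params) + batch_size * activation_bytes_per_sample


def max_batch_size(gpu_bytes: int, n_params: int,
                   activation_bytes_per_sample: int) -> int:
    """Largest batch that fits under this simple model (0 if the model alone is too big)."""
    free = gpu_bytes - static_bytes(n_params)
    if free <= 0:
        return 0
    return free // activation_bytes_per_sample


# Hypothetical model: 25 million parameters, 100 MiB of activations per sample.
N_PARAMS = 25_000_000
ACT_PER_SAMPLE = 100 * MIB
TITAN_X_BYTES = 12 * GIB  # 12 GB card

largest = max_batch_size(TITAN_X_BYTES, N_PARAMS, ACT_PER_SAMPLE)
print(f"Largest batch that fits: {largest}")
print(f"Fits at that size: "
      f"{training_bytes(N_PARAMS, largest, ACT_PER_SAMPLE) <= TITAN_X_BYTES}")
```

Real frameworks also reserve memory for workspace buffers and fragmentation, so the usable batch size is usually smaller than this estimate.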

Update for June: I recommend Lambda's post "Choosing the Best GPU for Deep Learning". The RTX 2080 Ti is the best GPU for deep learning for almost everyone. Despite being branded as a consumer-grade "gaming card", it remains the work...

Deep learning prowess is the calling card of the Titan V and of Volta in general, and that performance is what we will be investigating today.

TITAN RTX powers AI, machine learning, and creative workflows. The most demanding users need the best tools. TITAN RTX is built on NVIDIA's Turing GPU architecture and includes the latest Tensor Core and RT Core technology for accelerating AI and ray tracing.

Nvidia GeForce RTX 2080 Super Founders Edition is the most powerful GPU ever released. This GPU is built for deep learning. Powered by the NVIDIA RTX 2080 Super Max-Q GPU. It comes preinstalled with TensorFlow, PyTorch, Keras, CUDA, cuDNN and more.

Deep Learning A-Z. Ingredient list: Lenovo X1 Carbon 5th gen (supports Thunderbolt 3); Akitio Node (external GPU case to mount the TITAN X in, supports Thunderbolt 3); Ubuntu 16.04 (64-bit); Nvidia GeForce GTX TITAN X graphics card; NVIDIALinuxx86_64–run.

The twin-fan design of the TITAN RTX may hamper dense system configurations. As a plus, qualifying EDU discounts are available on TITAN RTX.

"A Novel FPGA Accelerator Design for Real-Time and Ultra-Low Power Deep Convolutional Neural Networks Compared With Titan X GPU". Abstract: Convolutional neural network (CNN) based deep learning algorithms require high data flow and computational intensity. For real-time industrial applications, they need to overcome challenges such as high data...

Deep learning is one of the fastest-growing segments of the machine learning or artificial intelligence field and a key area of innovation in computing. With researchers creating new deep learning algorithms and industries producing and collecting unprecedented amounts of data, computational capability is the key to unlocking insights from data. In the pursuit of continuing...

Deep learning: Deep learning is a branch of AI and machine learning that uses multi-layer artificial neural networks to accurately perform tasks such as object detection, speech recognition, and language translation.

Deep Learning Workstation PC with GTX Titan vs. Server with NVIDIA Tesla V100 vs. Cloud Instance. Selection of a workstation for deep learning. GPU: GPUs are the heart of deep learning. The computations involved in deep learning are matrix operations running in parallel. Best GPU overall: NVIDIA Titan Xp, GTX Titan X (Maxwell...

The difference between the architectures really matters for speed.

TITAN X is Nvidia's new flagship GeForce gaming GPU, but it's also uniquely suited for deep learning. NVIDIA CEO and co-founder Jen-Hsun Huang showcased three new technologies that will fuel deep learning during his opening keynote address to the 4,000 attendees of the GPU Technology Conference.

Alhanai and her team combined the processing power of a cluster of machines running more than 40 NVIDIA TITAN X GPUs with the TensorFlow, Keras and cuDNN deep learning libraries, and set to work training their model.

GeForce GTX TITAN X is the ultimate graphics card. It combines the latest technologies and performance of the new NVIDIA Maxwell™ architecture to be the fastest, most advanced graphics card on the planet.

RTX 2060 vs GTX 1080 Ti Deep Learning Benchmarks: Cheapest RTX card vs Most Expensive GTX card. Less than a year ago, with its GP102 chip, 3584 CUDA cores and 11GB of VRAM, the GTX 1080 Ti was the apex GPU of the last-gen Nvidia Pascal range (bar the Titan editions). The demand was so high that retail prices often exceeded $900, way above the...

The RTX 2080 Ti is an excellent GPU for deep learning, offering a fantastic performance/price ratio. The main limitation is the VRAM size. Training on an RTX 2080 Ti will require small batch sizes, and you will not be able to train large models in some cases. Using NVLink will not combine the VRAM of multiple GPUs, unlike with TITANs or Quadros.

Github U39kun Deep Learning Benchmark Deep Learning Benchmark For Comparing The Performance Of Dl Frameworks Gpus And Single Vs Half Precision

Hardware For Deep Learning Part 3 Gpu By Grigory Sapunov Intento

Nvidia Titanx 12g Pascal Titan X Pascal Scientific Computing Deep Learning Game Rendering Graphics Card Graphics Cards Aliexpress

Titan X Gpu Deep Learning のギャラリー

Can Fpgas Beat Gpus In Accelerating Next Generation Deep Learning

Nvidia Digits Devbox Promotes Deep Learning W Titan X Legit Reviews

The Latest Deep Learning Challenge By Nvidia Titan X

Deep Learning Nvidia Gtx Titan X Versus Gtx 750 Ti Youtube

A Shallow Dive Into Tensor Cores The Nvidia Titan V Deep Learning Deep Dive It S All About The Tensor Cores

Github U39kun Deep Learning Benchmark Deep Learning Benchmark For Comparing The Performance Of Dl Frameworks Gpus And Single Vs Half Precision

Why Your Personal Deep Learning Computer Can Be Faster Than Aws And Gcp By Jeff Chen Mission Org Medium

Nvidia S Monstrous Titan X Pascal Gpu Stomps Onto The Scene Pcworld

Q Tbn And9gcthleziaflrlo9 Kkyy5rrrsogvbu5ues1swpzvpdlggmv3wgzu Usqp Cau

Early Experiences With Deep Learning On A Laptop With Nvidia Gtx 1070 Gpu Part 1 Amund Tveit S Blog

The Best Gpus For Deep Learning In An In Depth Analysis

Deep Learning Benchmarks Comparison 19 Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Selecting The Right Gpu For Your Needs Exxact

1080 Ti Vs Rtx 80 Ti Vs Titan Rtx Deep Learning Benchmarks With Tensorflow 18 19 Bizon Custom Workstation Computers Best Workstation Pcs For Ai Deep Learning Video Editing

Titan Rtx Benchmarks For Deep Learning In Tensorflow 19 Xla Fp16 Fp32 Nvlink Exxact

Titan Rtx Deep Learning Benchmarks

Nvidia Geforce Gtx Titan X Review Pcmag

18 15 Macbook Pro 8th 6c H Rtx Titan 32gbps Tb3 Razer Core X Win10 1909 Airmc18 External Gpu Builds

Pascal Titan X Vs The Gtx 1080 First Benchmarks Revealed Babeltechreviews

Gpu Deep Learning Garden

Nvidia Digits Devbox Promotes Deep Learning W Titan X Legit Reviews

The Battle Of The Titans Pascal Titan X Vs Maxwell Titan X Babeltechreviews

Some Experiences And Suggestions On How To Choose A Suitable Gpu Card In Deep Learning Programmer Sought

Nvidia Geforce Gtx Titan X Pascal Graphics Card Announced

Performance Results Deep Learning Nvidia Titan Rtx Review Gaming Training Inferencing Pro Viz Oh My Tom S Hardware

Nvidia Ceo Talks Titan X Next Gen Pascal Deep Learning And Elon Musk At Gtc 15 Hothardware

Nvidia Titan X Single Precision Is More Important

Hardmaru Guide For Building The Best 4 Gpu Deep Learning Rig For 7000 T Co J9qvoexs9h

Deep Learning And Nvidia Titan X Digits Devbox Nvidia Blog

Titan Rtx Benchmarks For Deep Learning In Tensorflow 19 Xla Fp16 Fp32 Nvlink Exxact

Rtx 80 Ti Deep Learning Benchmarks With Tensorflow 19

Nvidia Deep Learning Solutions Alex Sabatier

Picking A Gpu For Deep Learning Buyer S Guide In 19 By Slav Ivanov Slav

Why Nvidia Is Betting On Powering Deep Learning Neural Networks Hardwarezone Com Sg

Titan Xp Graphics Card With Pascal Architecture Nvidia Geforce

Is The Titan X Better Than The 1080 Ti For Deep Learning And If So Why Quora

Deep Learning On Gpus Successes And Promises

Original Nvidia Gtx Titan X Titan X 12gb Ddr5 High End Gaming Deep Learning Graphics 4k Graphics Lazada Singapore

The New Nvidia Titan X The Ultimate Period The Official Nvidia Blog

What Is Currently The Best Gpu For Deep Learning Quora

1

Nvidia Titan V Launched For Ai And Deep Learning First Gpu Based On Volta Architecture Technology News

Hardware For Deep Learning Part 3 Gpu By Grigory Sapunov Intento

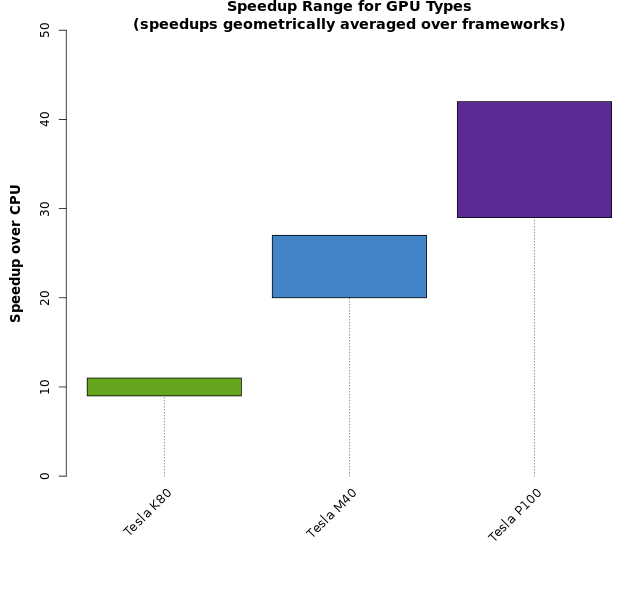

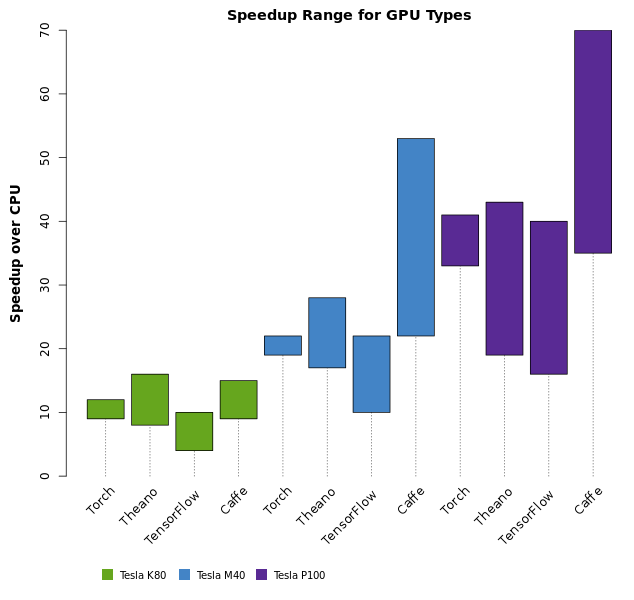

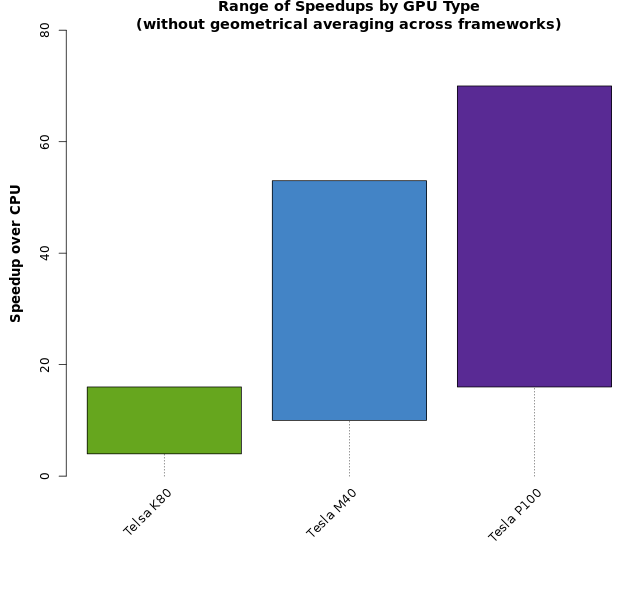

Deep Learning Benchmarks Of Nvidia Tesla P100 Pcie Tesla K80 And Tesla M40 Gpus Microway

Multi Gpu Scaling With Titan V And Tensorflow On A 4 Gpu Workstation

Nvidia Ceo Talks Titan X Next Gen Pascal Deep Learning And Elon Musk At Gtc 15 Hothardware

How To Select The Right Gpu For Deep Learning Electronics360

Deep Learning Gpu Benchmarks Tesla V100 Vs Rtx 80 Ti Vs Gtx 1080 Ti Vs Titan V

Titan V Vs 1080 Ti Head To Head Battle Of The Best Desktop Gpus On Cnns Is Titan V Worth It 110 Tflops No Brainer Right By Yusaku Sako Medium

Nvidia Deep Dives Into Deep Learning With Pascal Titan X Gpus Zdnet

Deep Learning Gpu Benchmarks Tesla V100 Vs Rtx 80 Ti Vs Gtx 1080 Ti Vs Titan V

Nvidia Unveils New Titan X Graphics Card Ai Trends

Nvidia Digits Devbox Promotes Deep Learning W Titan X Legit Reviews

Deep Learning And Nvidia Titan X Digits Devbox Nvidia Blog

Nvidia S Monstrous Titan X Pascal Gpu Stomps Onto The Scene Pcworld

Building A 5 000 Machine Learning Workstation With An Nvidia Titan Rtx And Ryzen Threadripper By Jeff Heaton Towards Data Science

Pascal Based Geforce Titan X Available From Today Benchmarks

Picking A Gpu For Deep Learning Buyer S Guide In 19 By Slav Ivanov Slav

Titanxp Vs Gtx1080ti For Machine Learning

Q Tbn And9gcryxrlmuaxrhwrqvv5psuinfhap1sdfkoih5jmyrcb98w0blpei Usqp Cau

Choose Proper Geforce Gpu S According To Your Machine Deep Learning Garden

Nvidia Aims At Graphics And Deep Learning Pcmag

Official Gtx Titan X Specs 1000 Price Unveiled At Gtc 15 Gamersnexus Gaming Pc Builds Hardware Benchmarks

Deep Learning Benchmarks Of Nvidia Tesla P100 Pcie Tesla K80 And Tesla M40 Gpus Microway

Buy Deep Learning Devbox Intel Core I9 79x 128gb Memory 2x Nvidia Titan Xp Gpu Preinstalled Ubuntu16 04 Cuda9 Tensorflow For Machine Learning Ai 2 X Nvidia Titan Xp Gpu In Cheap

Nvidia Geforce Gtx Titan X Pascal Graphics Card Announced

Rtx 60 Vs Gtx 1080ti Deep Learning Benchmarks Cheapest Rtx Card Vs Most Expensive Gtx Card By Eric Perbos Brinck Towards Data Science

Gpu Gpu Performance 및 Titan V Rtx 80 Ti Benchmark

Tensorflow Performance With 1 4 Gpus Rtx Titan 80ti 80 70 Gtx 1660ti 1070 1080ti And Titan V

Picking A Gpu For Deep Learning Buyer S Guide In 19 By Slav Ivanov Slav

Why Your Personal Deep Learning Computer Can Be Faster Than Aws And Gcp By Jeff Chen Mission Org Medium

Hpe Launches New Gpu Packed Machines For Deep Learning Workloads Siliconangle

Gpu Gpu Performance 및 Titan V Rtx 80 Ti Benchmark

Best Gpu For Deep Learning In Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Benchmarks Bizon Custom Workstation Computers Best Workstation Pcs For Ai Deep Learning

Best Deep Learning Performance By An Nvidia Gpu Card The Winner Is Amax Blog And Insights

Best Gpu For Deep Learning In Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Benchmarks Bizon Custom Workstation Computers Best Workstation Pcs For Ai Deep Learning

Best Gpu S For Deep Learning 21 Updated Favouriteblog Com

Deep Learning Gpu Best Computer For Deep Learning Tooploox

D Which Gpu S To Get For Deep Learning My Experience And Advice For Using Gpus In Deep Learning Machinelearning

Nvidia Digits Devbox And Deep Learning Demonstration Gtc 15 Youtube

Nvidia Gtx Titan X Pascal Review Vs Gtx 1080 Sli 1070s Gamersnexus Gaming Pc Builds Hardware Benchmarks

The Nvidia Titan V Deep Learning Deep Dive It S All About The Tensor Cores

Nvidia Titan X Ultimate Graphics Card Unleashed

Best Deep Learning Performance By An Nvidia Gpu Card The Winner Is Amax Blog And Insights

Machine Learning Deep Learning Archives Kompulsa

Can Fpgas Beat Gpus In Accelerating Next Generation Deep Learning

Build A Machine Learning Dev Box With Nvidia Titan X Pascal By Kunfeng Chen Medium

Deep Learning Benchmarks Of Nvidia Tesla P100 Pcie Tesla K80 And Tesla M40 Gpus Microway

Nvidia Gtx Titan X 12gb In The Mac Pro 5 1 2 Titan X Gpus In One Mac Pro Most Powerful Cuda Graphics Card Ever The Ultimate Mac Pro Community

Nestlogic

Best Deep Learning Performance By An Nvidia Gpu Card The Winner Is Amax Blog And Insights

Deep Learning And Nvidia Titan X Digits Devbox Nvidia Blog

Nvidia Ceo Talks Titan X Next Gen Pascal Deep Learning And Elon Musk At Gtc 15 Hothardware

Deep Learning Benchmarks Comparison 19 Rtx 80 Ti Vs Titan Rtx Vs Rtx 6000 Vs Rtx 8000 Selecting The Right Gpu For Your Needs Exxact

Nvidia Speeds Up Deep Learning Software

What Is Currently The Best Gpu For Deep Learning Quora

Build Your Deep Learning Box Wiki Thread Part 2 Alumni 18 Deep Learning Course Forums

Rtx 80 Ti Deep Learning Benchmarks With Tensorflow 19

Training Deep Learning Models On Multi Gpus va Next Technologies

The Best Gpus For Deep Learning In An In Depth Analysis

1

Nvidia S Digits Devbox Is A Us 15 000 Deskside Deep Learning Machine Hardwarezone Com Sg